
April 27, 2025

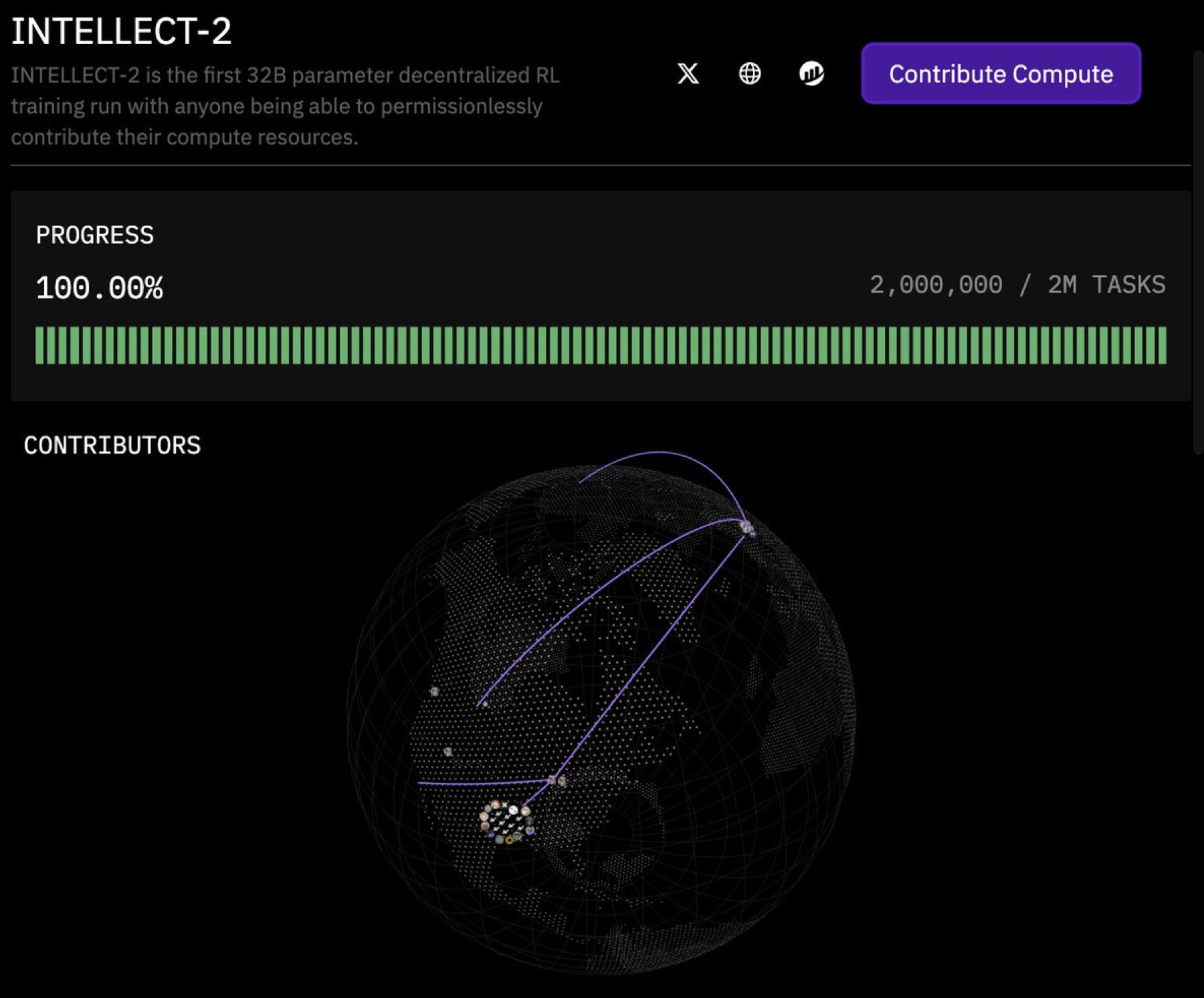

INTELLECT-2: First Decentralized 32B RL Training Run Complete

Prime Intellect (@PrimeIntellect) announced the completion of INTELLECT-2, the first decentralized reinforcement learning (RL) training run for a 32-billion-parameter model.

Key Points:

- Milestone: This marks the first successful decentralized RL training of a 32B model.

- Open Collaboration: The training was open to compute contributions from anyone, making it fully permissionless.

- Goal: The project aims to scale towards frontier reasoning capabilities in areas like coding, math, and science.

- Upcoming Release: A full open-source release, including model checkpoints, training data, and a detailed technical report, is expected approximately one week after the announcement (made in late April 2025).

- Community Effort: The announcement highlighted the significant contributions from various compute providers, including Demetercompute, string, BioProtocol, mev_pete, plaintext_cap, skre_0, oldmankotaro, plabs, ibuyrugs, 0xfr, marloXBT, herb0x_, mo, toptickcrypto, cannopo, samsja19, jackminong, and primeprimeint1234.

Links: